EvalsOne

Tags: AI Testing

Introduction

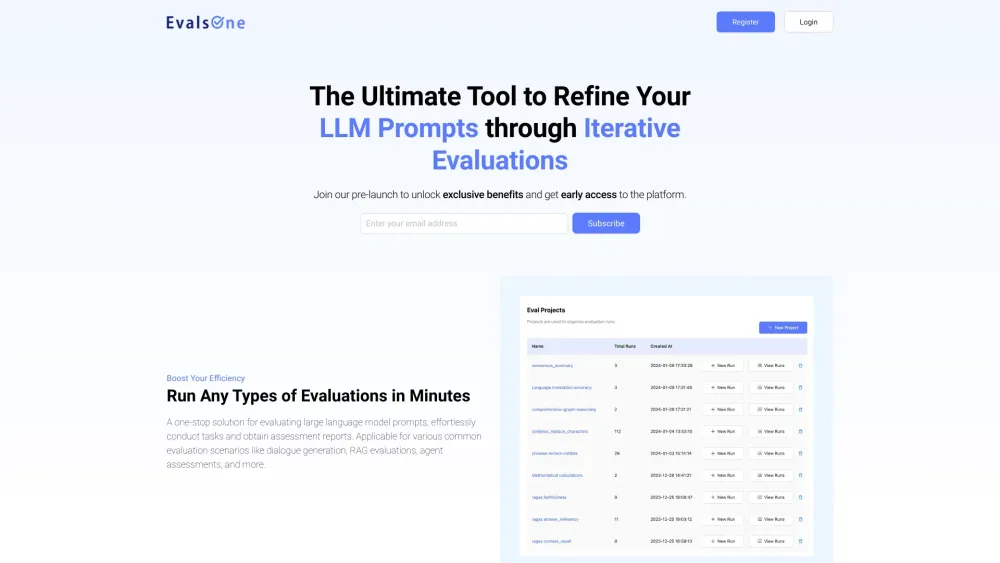

EvalsOne is a platform for evaluating prompts in generative AI applications. It provides tools for iteratively developing and refining these applications, covering the evaluation of LLM prompts, RAG flows, and AI agents. EvalsOne supports both rule-based and LLM-based evaluation methods, integrates human evaluation, and offers several ways to prepare sample data. It also integrates with a wide range of models and channels and supports customizable evaluation metrics.

How To Use

EvalsOne provides an interface for creating and organizing evaluation runs. Users can fork a run to iterate quickly or analyze it in depth, compare template versions, and optimize prompts. Results are presented in evaluation reports. Evaluation samples can be prepared from templates, variable value lists, or OpenAI Evals samples, or by copying and pasting code from Playground. Supported models and channels include OpenAI, Claude, Gemini, Mistral, Azure, Bedrock, Hugging Face, Groq, and Ollama, plus API calls for local models. The platform also integrates with agent orchestration tools such as Coze, FastGPT, and Dify.
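To illustrate the idea of preparing samples from a template and variable value lists, here is a minimal Python sketch. It is not EvalsOne's API: the function name `expand_samples`, the `$name` placeholder syntax, and the example variables are all assumptions made for illustration. It renders one prompt for every combination of variable values.

```python
from itertools import product
from string import Template


def expand_samples(template: str, variables: dict[str, list[str]]) -> list[str]:
    """Render one prompt per combination of variable values.

    Hypothetical helper for illustration only; not part of EvalsOne.
    """
    names = list(variables)
    prompts = []
    # product() yields every combination, varying the last variable fastest.
    for combo in product(*(variables[name] for name in names)):
        values = dict(zip(names, combo))
        prompts.append(Template(template).substitute(values))
    return prompts


# Assumed example template and value lists.
template = "Translate '$text' into $language."
variables = {
    "text": ["Hello", "Good night"],
    "language": ["French", "German"],
}

samples = expand_samples(template, variables)
for prompt in samples:
    print(prompt)
```

Two texts and two languages produce four prompts. Each one could then be run against a model and scored as a separate evaluation sample.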

Pricing

| Package | Price | Features |
|---|---|---|
| Free Edition | Free | Unlimited public repositories, limited private repositories |
| Team Edition | $4/user/month | Unlimited private repositories, basic features |
| Enterprise Edition | $21/user/month | Advanced security and auditing features |